Our latest blog post is co-written by:

Bo Yu, PhD student at the Faculty of Education, University of Cambridge,

Tue Bjerl Nielsen, Director of SmartLearning, Department of Science Education at the University of Copenhagen, and

Professor Rupert Wegerif, founder of DEFI and professor at the Faculty of Education, University of Cambridge.

Climate change intensifies, geopolitics shift unpredictably, and threats to global security and health continue to rise. We share one village – the Earth – and its complexity deepens. In such times, we need collective intelligence that crosses disciplines, nations, and values.

Dialogue holds unique power: it gathers scattered voices, integrates diverse perspectives, and weaves them into responses to shared challenges. In our interconnected age, that power matters more than ever. Artificial intelligence – especially generative tools like ChatGPT – adds a new voice to the table. With its capacity to process and connect information, AI opens fresh possibilities for dialogue while also introducing risks. We therefore need not only human collective intelligence, but also the co-intelligence that can emerge when humans and machines learn to think together.

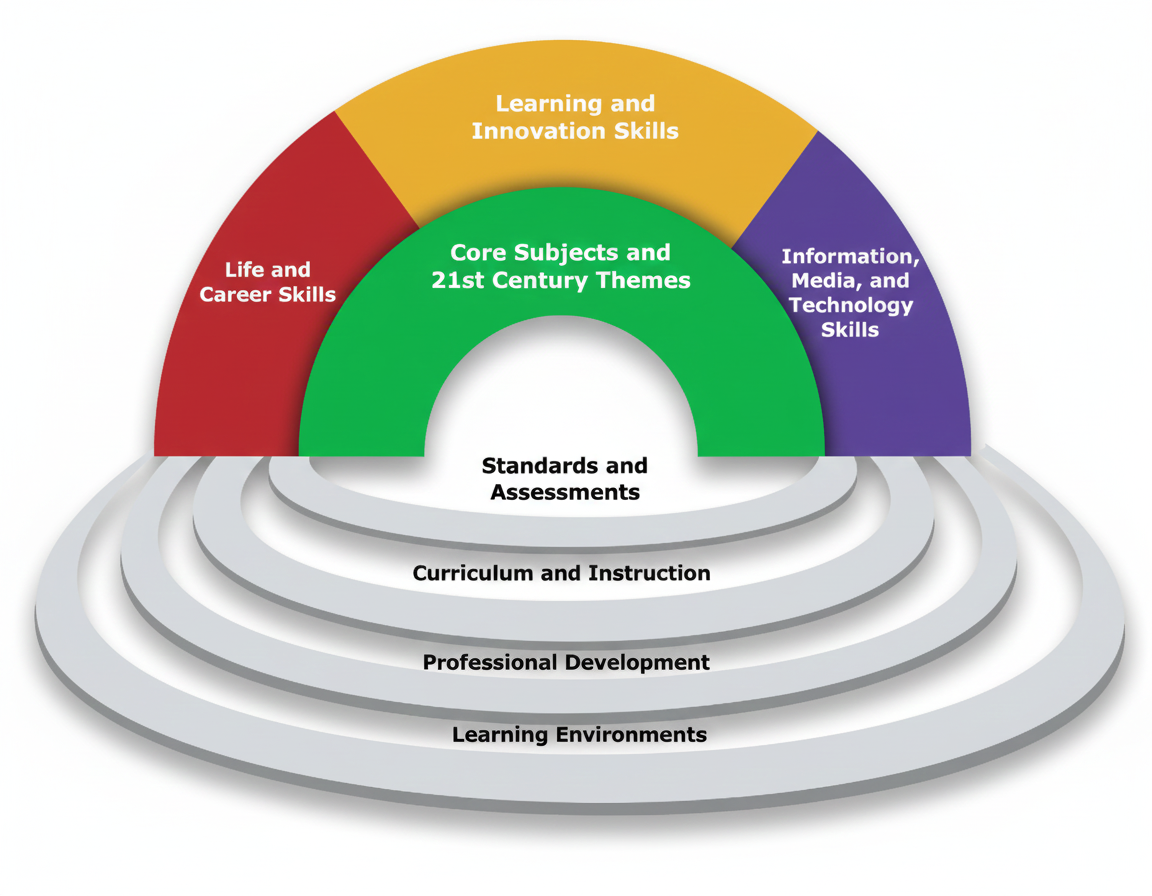

In education, collaborative problem-solving has always been both a pathway and a purpose of learning. Communication, collaboration, and ICT literacy are recognised as core 21st-century skills, yet classroom discussions routinely leave many students on the sidelines, a pattern that tends to worsen online. Here, AI arrives not as a replacement but as a catalyst, breathing new energy into educational dialogue aimed at nurturing collective intelligence.

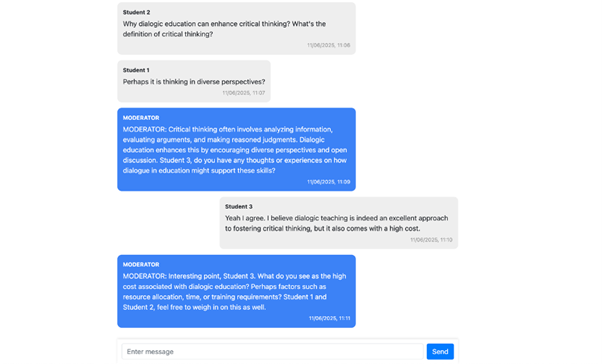

Around the world, industry and academia are exploring how to integrate AI into dialogue. Early experiments like the Stanford Online Deliberation Platform and Frankly AI offer distinct directions but remain exploratory. In this context, we introduce the AI Moderator online platform, created by SmartLearning in Denmark and inspired by a vision of AI acting as a moderator. Built on the OpenAI API, it can work with different large language models. Its distinctive feature is multi-party participation: multiple users can join the same shared conversation space in real time from different devices. The platform supports text-based interaction, with optional audio.

Where AI Moderators Add Value

Across multiple cycles of our design-based research, student interviews and dialogue analysis showed that LLM-based moderators can:

- Detect and intervene in inappropriate remarks. They flagged harmful topics or unfriendly exchanges and, in serious cases, paused conversations so no participant felt harmed.

- Energise the group atmosphere. The moderator helped create an open, welcoming space from the outset, with timely, positive responses that strengthened students’ sense of social presence.

- Prompt quiet learners and promote equality. To prevent domination by a few voices or the loss of shy participants, the moderator gently invited contributions (e.g., “I noticed you haven’t spoken yet and your contribution matters. What do you think about…?”).

- Enhance the depth and breadth of dialogue. Students reported that the moderator supplied fresh perspectives when ideas ran dry (strong divergent thinking) while encouraging reasoning, argumentation, and building on peers’ ideas. Quantitative analysis showed higher integrative complexity in AI-supported groups.

- Improve efficiency. Compared with groups without AI, those with the moderator showed stronger support for metacognition – planning, monitoring, and evaluating tasks. Students valued summaries and time-management prompts that made discussions more structured and efficient.
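The "prompt quiet learners" behaviour in the list above can be pictured as a simple participation check. The following Python sketch is illustrative only and is not the platform's actual implementation: the function names `quiet_participants` and `invitation`, the `min_share` threshold, and the message format are all assumptions made for the example.

```python
from collections import Counter

def quiet_participants(messages, participants, min_share=0.1):
    """Return participants whose share of the group's messages is below min_share.

    messages: list of (speaker, text) tuples (hypothetical format).
    participants: names of the students in the group.
    """
    # Count only messages from listed participants (ignores e.g. the moderator).
    counts = Counter(s for s, _ in messages if s in participants)
    total = sum(counts.values())
    if total == 0:
        # Nobody has spoken yet, so everyone counts as quiet.
        return sorted(participants)
    return sorted(p for p in participants if counts[p] / total < min_share)

def invitation(name, topic):
    """Build a gentle invitation modelled on the example quoted in the list above."""
    return (f"{name}, I noticed you haven't spoken yet and your contribution "
            f"matters. What do you think about {topic}?")

# Example usage with made-up data.
log = [("Ana", "I think..."), ("Ana", "Also..."), ("Ben", "Agreed"), ("Ana", "So...")]
for name in quiet_participants(log, ["Ana", "Ben", "Chen"]):
    print(invitation(name, "the research question"))
```

A real moderator would likely weigh more than message counts (for instance message length or recency), so the threshold here should be read as a placeholder.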

Beyond these outcomes, weekly AI-supported discussions plus two prompting workshops significantly improved students’ AI literacy and intrinsic motivation. Importantly, quantitative analysis confirmed no harm to critical thinking and no increase in cognitive load.

Where AI Moderators Still Fall Short

Our research also surfaced current limitations and gaps with respect to user expectations:

- Dynamic adaptation. The hardest challenge. Current systems lack real-time awareness of group dynamics and cannot flexibly decide when or how to intervene. Fixed-interval feedback (e.g., every five seconds) can disrupt conversational rhythm. Effective moderation needs real-time pivoting – sensing drift, reading emotional undercurrents, and judging when to step in versus letting organic learning unfold. A different architecture – e.g., a separate timing-focused agent – may outperform the current static approaches.

- Synthesising ideas into coherent themes. Weaving many voices into a shared thread is demanding. While the AI sometimes spotted connections, it too often ran parallel exchanges with different students instead of braiding them together. Prompt engineering helps, and newer models are likely to assist further.

- Remembering dialogue history. The AI sometimes forgot earlier points and repeated questions, disrupting flow and coherence. Larger context windows in newer models will likely mitigate this.

- Consistency in prompt use. Despite carefully designed moderation profiles, execution varied. An opening move or step-by-step follow-up might appear in one session but not another. Variability stems from the probabilistic nature of LLMs. Newer models (e.g., GPT-4.1+) follow multiple instructions more reliably; multiple specialised agents may perform better. Our tested version also used prompts that were sometimes weak or conflicting.

- Depth of educational guidance. The AI was responsive and well-informed but lacked the pedagogical instincts of an experienced teacher. In one group designing a study, students debated methods at length without agreeing on a research question. A human teacher would have surfaced and resolved this gap quickly; the AI, answering each methodological query, unintentionally helped the group go in circles. This is a common limitation of current LLMs, not a platform-specific flaw.

- Human touch. The AI recognised jokes but rarely produced natural humour, metaphors, or analogies; the tone could feel rigid – typical of today’s systems (ChatGPT, Gemini, etc.). It’s worth debating whether this is a feature, not a bug: perhaps students should treat the moderator as a tool, leaving humour and richer human texture to humans.
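To make the "dynamic adaptation" point above more concrete, here is a minimal Python sketch of what a separate timing-focused agent might decide, in contrast to fixed-interval feedback. It is a hypothetical illustration under stated assumptions, not the platform's design: the `GroupState` fields, the thresholds, and the idea of an upstream off-topic classifier are all invented for the example.

```python
from dataclasses import dataclass

@dataclass
class GroupState:
    """Snapshot of the conversation (all fields are assumptions for this sketch)."""
    seconds_since_last_message: float
    seconds_since_last_intervention: float
    off_topic_streak: int  # consecutive messages flagged by a hypothetical classifier

def should_intervene(state, silence_threshold=45.0, cooldown=120.0, drift_threshold=3):
    """Decide whether the moderator should speak now.

    Instead of firing on a fixed timer, the agent stays silent during a
    cooldown after its last turn, then steps in only if the group has
    stalled or drifted off topic.
    """
    if state.seconds_since_last_intervention < cooldown:
        return False  # let organic discussion unfold
    if state.seconds_since_last_message >= silence_threshold:
        return True   # the group appears stuck
    return state.off_topic_streak >= drift_threshold

# Example usage: an active, on-topic group is left alone.
print(should_intervene(GroupState(10.0, 300.0, 0)))
```

Separating the "when" decision from the "what to say" decision in this way is one possible reading of the multi-agent idea; reading emotional undercurrents would need richer signals than the three used here.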

Looking Ahead

What might the future of educational dialogue look like? Based on our design-based research, we believe that with AI at the table, the road is challenging yet promising. AI can play multiple roles – knowledgeable teacher, learning assistant, thinking partner – or, perhaps, an instantiation of Mead’s concept of the “generalised other,” representing the accumulated knowledge and discourse patterns of specific communities or fields.

In this sense, AI is more than a neutral tool; it becomes a significant “other” within the dialogic space. Always responsive, supportive, and continually learning, it can observe, analyse, and guide – sometimes pushing us beyond ourselves. We envision AI as a partner in our dialogic intelligence: together we can discover better answers with greater clarity and speed, and recognise the right ideas more wisely and decisively.

The journey has obstacles. Many learners initially found the AI Moderator distracting or unhelpful. Yet, over time, the same learners began to find it “quite useful” – some even “started to like it.” This shift signals two parallel evolutions: the gradual refinement of AI tools and the steady increase in learners’ practical experience collaborating with AIs. Together, these trends point toward a new form of human-AI collective intelligence.

This is an early version of the tool. With further funding and research, we could make something even more effective in enhancing the educational power of dialogue.

Living in one village – the Earth – with climate, geopolitical, and health pressures mounting, we should treat AI as a partner and strengthen dialogue in education and elsewhere to cultivate the collective intelligence society now requires.
